Ex-openai employee predicting agi in 3 years. Read his book and give thoughts?

Leopold Aschenbrenner, an ex-OpenAI employee, wrote this book (free, online):

https://situational-awareness.ai/

Read it and discuss here? It's all over twitter now. He was on Dwarkesh's podcast recently.

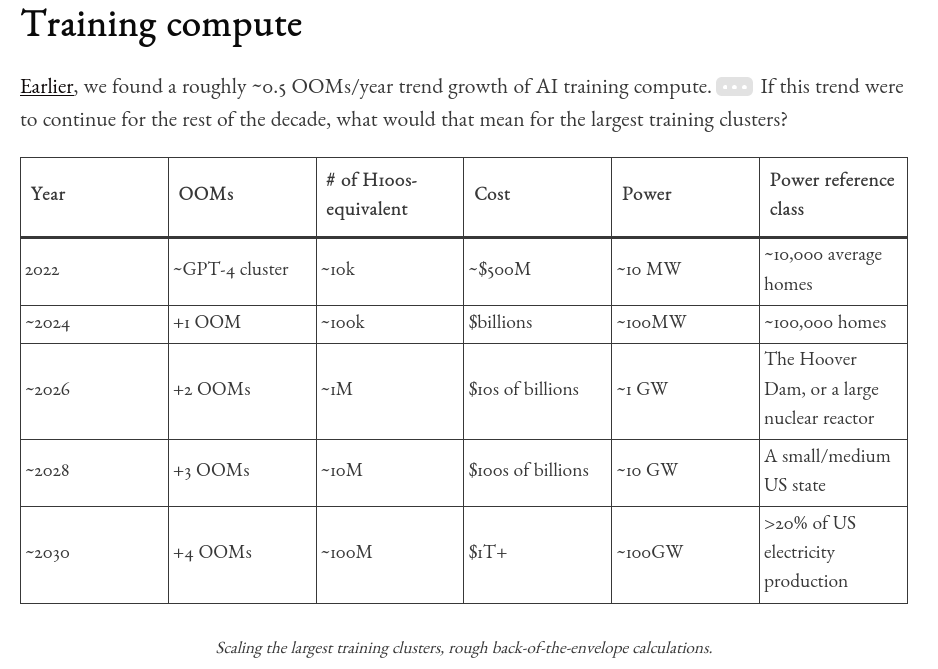

He seems to be predicting that once we build a cluster that costs about 100 billion dollars (which Microsoft & OpenAI are already planning) we will probably reach some form of AGI & a super intelligence takeoff.

Obviously, let's discuss what we are investing in. Drop ticker symbols. I would like to be positioned in large parts of the chip fab / suppliers / datacenter supply chain but am just kind of waiting for a good entry point. I mostly only have small positions in the stuff I list below.

Positions:

Chip makers: NVDA

Tooling Suppliers: AMAT, KLAC, ASML

AI product companies: META, GOOG, TSLA, MSFT

-

bostonimproper

- Posts: 617

- Joined: Sun Jul 01, 2018 11:45 am

Re: Ex-openai employee predicting agi in 3 years. Read his book and give thoughts?

Uranium mining? Huge energy demand.

Re: Ex-openai employee predicting agi in 3 years. Read his book and give thoughts?

Do you know if it's a 100 bil for one instance of superior intelligence? Or 100b to train, but then inference can run on cheaper clusters?

Also, does he address how they'll fix the core issues that are still preventing AI from reaching mass adoption (hallucinations, and not being able to discern valuable info from low-grade Internet bullshit during training)? It doesn't seem like that can be fixed with just a larger cluster.

Re: Ex-openai employee predicting agi in 3 years. Read his book and give thoughts?

Dude really wants to ensure he keeps his equity. Anyone who used to work for OpenAI has to sell AI and AGI hard, because they have millions in equity for it, and if they badmouth it… OpenAI takes it all away. The level of grift here is ultra high, impossible to take seriously due to conflicts of interest.

This is a “sell the shovels while people are still interested” situation. You can still make a lot of money selling compute, arguing that it’s going to be the next big thing, selling that “AGI will be very soon”. If you have any level of credibility in the field, you should be cashing in on it to feed the delusion (remember, OpenAI employees who say bad things about OpenAI don’t get to pass go and collect their millions of dollars of equity). Any company selling wrappers on ChatGPT is idiotic (they have no moat) and is going to fail, because any of the big players can eat their lunch at any time; but some of them are going to be producing the mundane tools that deliver actual value.

MSFT, GOOG, META are burning their goodwill with the average user at a breakneck pace. Google has messed up so bad that posts about people switching to different search engines trend on HN about once a week. That was basically unthinkable 5 years ago. OpenAI is already moving to the endgame of all internet based services, i.e. “we need to stick a lot of ads in it”.

An absolutely amazing time we live in, where people can sell the hype of “this is gonna be the most coolest brilliant thing that ever existed” when the actual product tells you to put Elmer’s glue onto your pizza.

Re: Ex-openai employee predicting agi in 3 years. Read his book and give thoughts?

zbigi wrote: ↑Thu Jun 06, 2024 7:41 am
Do you know if it's a 100 bil for one instance of superior intelligence? Or 100b to train, but then inference can run on cheaper clusters?

Also, does he address how they'll fix the core issues that are still preventing AI from reaching mass adoption (hallucinations, and not being able to discern valuable info from low-grade Internet bullshit during training)? It doesn't seem like that can be fixed with just a larger cluster.

Screenshot of the table of cluster information from the book, for easier discussion. He's talking about the cost to build a cluster for the training run of very large models.

Re: Ex-openai employee predicting agi in 3 years. Read his book and give thoughts?

The author notes that nuclear takes too long to build to cover the 20%+ increase over current baseline US electricity demand he expects this to cause by 2030. It will likely have to come from natural gas, which we have in excess supply domestically and for which we can quickly build power plants.

Nat gas producers I haven't really looked into. Anyone else??

Re: Ex-openai employee predicting agi in 3 years. Read his book and give thoughts?

Maybe catalogues of intellectual capital? I don't know to what extent the models are currently violating copyright law by being trained on works that are still under protection. The models can write rather intelligently about recent works, but maybe only due to all the reviews and summaries available on the internet. As an extreme example, there is a big difference between an AI answering a question about whether or not the Sumerian civilization invented the concept of zero by interpreting and translating images of clay tablets vs. simply echoing or comparing/contrasting the most relevant articles on Wikipedia and 5 other internet sites.

The author indicated that national security was another big upcoming issue, but I don't know what sort of play that would warrant. I suppose you could readily imagine a situation in which producers of conventional weaponry and similar defensive systems might profit through having to provide protection for AI centers.

Re: Ex-openai employee predicting agi in 3 years. Read his book and give thoughts?

giskard wrote: ↑Thu Jun 06, 2024 9:51 am
The author notes that nuclear takes too long to build to cover the 20%+ increase over current baseline US electricity demand he expects this to cause by 2030. It will likely have to come from natural gas, which we have in excess supply domestically and for which we can quickly build power plants.

Nat gas producers I haven't really looked into. Anyone else??

Will y'all's power grid hold? Is there something to TX being on a separate, failing grid from the rest of the country that could cause 2nd and 3rd order effects in light of the rising energy demand?

- maskedslug

- Posts: 18

- Joined: Fri Feb 09, 2024 4:40 pm

Re: Ex-openai employee predicting agi in 3 years. Read his book and give thoughts?

I'm with Slevin on this one. AI made a big step forward in the past few years. I think much of the hype is working under the assumption that that kind of progress just continues linearly as you throw more compute at it. That seems fundamentally flawed to me for much the same reason 1,000 seagulls aren't collectively smarter than one human.

We've got some very impressive autocomplete technology that can, with increasing accuracy, approximate human writing (and speech) patterns. But these AIs are not *thinking*. They're not going to be able to solve novel problems. They can't know when they're just making things up.

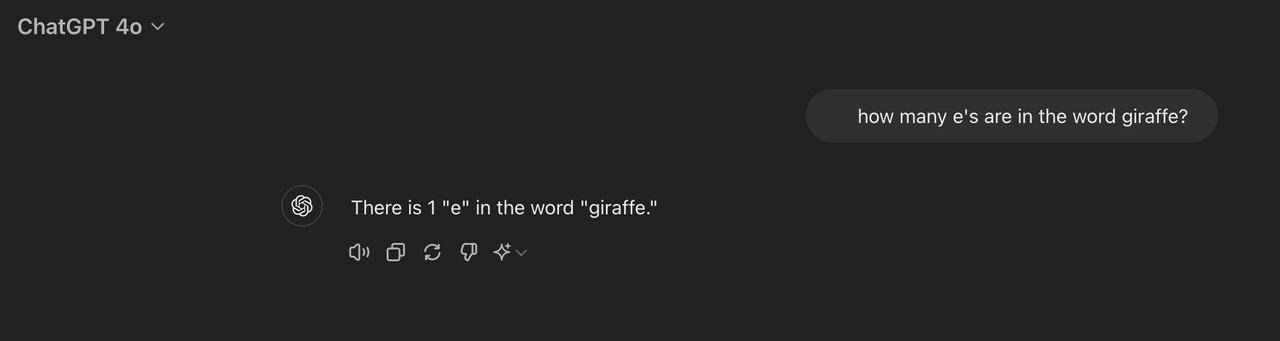

Here's a lovely GPT-4 conversation I just had:

how many e's are in the word giraffe?

The word "giraffe" contains 2 instances of the letter "e".

We're coming up on the 2 year anniversary of the first reveal of this super-impressive ChatGPT. In the past 2 years, they've been able to make GPT regurgitate some rather impressive things! The author claims ChatGPT is now as intelligent as a "smart high schooler". But it still can't count letters in a word you give it, something your average pre-schooler could do.
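As a sanity check on the exchange above, letter counting is trivial to do deterministically. A minimal Python sketch (the helper name `count_letter` is made up for illustration and does not come from the thread):

```python
def count_letter(word: str, letter: str) -> int:
    """Count case-insensitive occurrences of a single letter in a word."""
    return word.lower().count(letter.lower())


# g-i-r-a-f-f-e: one "e", two "f"s
print(count_letter("giraffe", "e"))
print(count_letter("giraffe", "f"))
```

So the correct answer is 1, not the 2 the model reported.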

Much of this AI hype reminds me of blockchain hype and self-driving car hype. Neither are *dead*. But reality and widespread predictions have diverged quite significantly. In 2016, Elon Musk was predicting that in 2 years, cars could drive themselves, unmanned completely, across country. 8 years later, someone making that claim today would be laughed out of the room. We know better now. I expect much the same happens for these AI predictions.

Re: Ex-openai employee predicting agi in 3 years. Read his book and give thoughts?

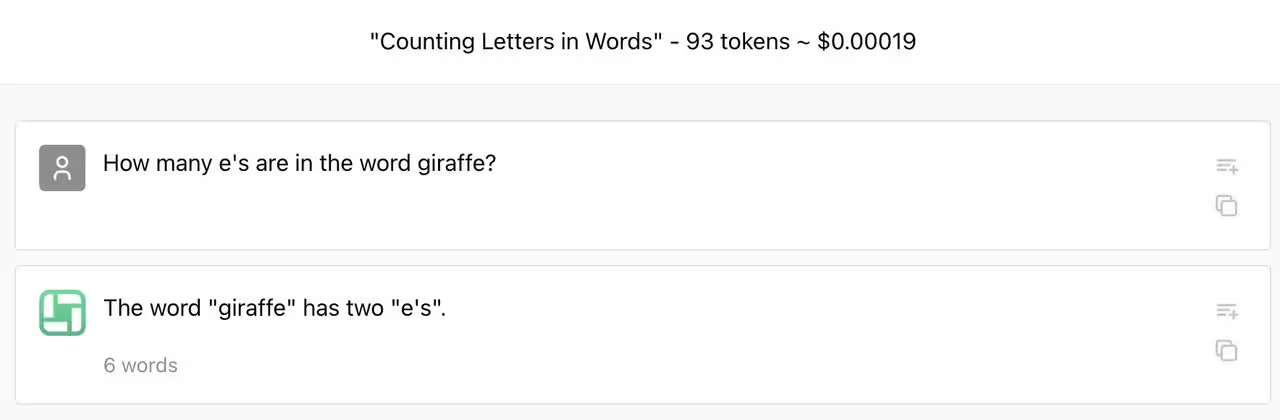

maskedslug wrote: ↑Thu Jun 06, 2024 8:50 pm
We're coming up on the 2 year anniversary of the first reveal of this super-impressive ChatGPT. In the past 2 years, they've been able to make GPT regurgitate some rather impressive things! The author claims ChatGPT is now as intelligent as a "smart high schooler". But it still can't count letters in a word you give it, something your average pre-schooler could do.

Are you trolling? Or were you not using GPT-4?

Here is what I get:

Re: Ex-openai employee predicting agi in 3 years. Read his book and give thoughts?

maskedslug wrote: ↑Thu Jun 06, 2024 8:50 pm
We've got some very impressive autocomplete technology that can, with increasing accuracy, approximate human writing (and speech) patterns. But these AIs are not *thinking*. They're not going to be able to solve novel problems. They can't know when they're just making things up.

I think there is an argument to be made that they will not get smarter than the source material they are trained on. But I'm not going to bet on it personally. It seems that if you extrapolate linearly, they should surpass human problem solving ability with an order-of-magnitude scale-up. Worst case, it won't be LLMs that do it and it could be a new architecture. Everyone is going multi-modal now, so it's hard to say how that will evolve.

I do know a little bit about the transformer architecture and I believe they have a lot of levers to pull. There is a paper called "Attention Is All You Need" that introduced this type of model, and if you read it I think you will see there are different ways to try to increase their capabilities.

Re: Ex-openai employee predicting agi in 3 years. Read his book and give thoughts?

No different than Tulip mania IMO. There's always plenty of people who are easily blinded by greed, and plenty of operators trying to take advantage of them. The only times where that was not true was pre-capitalism I guess.

Re: Ex-openai employee predicting agi in 3 years. Read his book and give thoughts?

Nah, at any point in Western/Middle Eastern history there were at least 3 people claiming to be the messiah. One of my favorites was from Crete: he told everyone to come to a high cliff with all their wealth and belongings, and said that he'd take them to Jerusalem to start the golden messianic age etcetera. He was gonna do it like Moses: he was gonna part the sea for them. All they had to do was have faith and jump.

They jumped. Local fishermen saved some, but not many. And the messiah was nowhere to be seen, disappearing with their valuables and belongings.

In this particular case, I get the argument: if others start a bubble, why not ride it. I wish good luck to those who can - may yall do it successfully.

-

jacob

- Site Admin

- Posts: 17174

- Joined: Fri Jun 28, 2013 8:38 pm

- Location: USA, Zone 5b, Koppen Dfa, Elev. 620ft, Walkscore 77

Re: Ex-openai employee predicting agi in 3 years. Read his book and give thoughts?

giskard wrote: ↑Thu Jun 06, 2024 9:51 am
The author notes that nuclear takes too long to build to cover the 20%+ increase over current baseline US electricity demand he expects this to cause by 2030. It will likely have to come from natural gas, which we have in excess supply domestically and for which we can quickly build power plants.

Well, if you must, you could build one or two giant 10-100GW nuclear plants within a decade. What holds them back (in the US) is NIMBY and the problem that they aren't that profitable. Power plants generally top out around 1GW, but that is because that's about the amount of power they can distribute regionally before wasting it all on transmission losses further away, not because the laws of physics limit the size. In other words, you can't draw power for your 100GW by building power plants all around the country. They all have to be fairly close by. The way other power hungry applications, like particle accelerators, handle this is with big capacitor banks that can provide a temporary boost, but I suppose a data center has to stay on at all times.

Conversely, I don't think gas turbines scale in size, so now you're talking somewhere between a couple of hundred and a couple of thousand gas fired power plants concentrated regionally and the pipeline infrastructure to feed those.
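To see how a range like "a couple of hundred to a couple of thousand" plants could arise, here is a rough back-of-the-envelope sketch in Python. The 100GW target is the upper end of the figure mentioned above; the per-plant sizes of 500MW and 50MW are illustrative assumptions, not numbers taken from the post or the book.

```python
import math


def plants_needed(target_gw: float, plant_mw: float) -> int:
    """Number of plants of size plant_mw needed to supply target_gw in total."""
    return math.ceil(target_gw * 1000 / plant_mw)


TARGET_GW = 100  # upper end of the 10-100GW range discussed above

# Assumed (hypothetical) per-plant capacities, large and small
for plant_mw in (500, 50):
    print(f"{plant_mw} MW per plant -> {plants_needed(TARGET_GW, plant_mw)} plants")
```

Under these assumptions the count lands at 200 plants for the larger size and 2000 for the smaller one; different plant sizes would shift the range accordingly.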

Note that US electric power generation has been trending sideways for many years as the economy has become more energy efficient, with new construction serving to replace old construction, mainly coal.

Re: Ex-openai employee predicting agi in 3 years. Read his book and give thoughts?

From what I understand, we don't create quality data quickly enough. The millions of boomers commenting on each other's Facebook posts will not push your model. I work with AI and we can throw away 80% of the data we receive. That number must be much higher for OpenAI.

Here is a good critical piece written about the AI bubble.

https://www.wheresyoured.at/bubble-trou ... f%20GPT-4.

-

jacob

- Site Admin

- Posts: 17174

- Joined: Fri Jun 28, 2013 8:38 pm

- Location: USA, Zone 5b, Koppen Dfa, Elev. 620ft, Walkscore 77

Re: Ex-openai employee predicting agi in 3 years. Read his book and give thoughts?

This is also where I'm stuck with it. As far as I can tell the current form of AI perfectly replicates "the average person on the internet": histrionic, uninspiring, and not overly concerned with the facts. Personally, I can't tell the difference, so it passes the Turing test for me when it comes to the average internet human. However, if there is going to be an AI revolution, I think it requires more than emulating any number of internet-people with too much time on their hands. I was impressed that the latest models test above the 100 IQ level, but IIRC, the result was 101, which for all practical purposes is the same as asking random people random questions on reddit.

It seems to me that any singularity should be "training on itself". In other words, it should be thinking for itself, whereas what we have now is AI learning to think like the average luser. Ugh! I'll note that the average human brain uses 20W of power and can be hired to think for $5/hour. Let's make a fake-AI company, where we put 1000 humans in a sweatshop and pretend to be AI (yes, a certain big famous company that everybody knows already did that). I suspect it would be priced at a P/E>100.

Perhaps the most potential is not in teaching AI to "talk like a human" but to understand complexity where human analytics fail. Apparently great strides have been made in weather forecasting. This would create a new "science" to predict phenomena where "old science" has proven inadequate.

Re: Ex-openai employee predicting agi in 3 years. Read his book and give thoughts?

Categorically different. If you wanted to be accurate, you could compare it to the asset bubble that formed around railroad stocks in the 1800s, where everyone lost a lot of money.

Tulip mania comparison is unserious. I don't believe you are engaging with these ideas with an open mind.

- maskedslug

- Posts: 18

- Joined: Fri Feb 09, 2024 4:40 pm

Re: Ex-openai employee predicting agi in 3 years. Read his book and give thoughts?

Looks like you're using GPT-4o, which might yield different results. My results came from the "GPT-4 (Limited Beta)" model. There is also a seed of randomness to these outputs. But no: not trolling. That is what I got back. And just tried again, and got the same:

I use GPT a handful of times every week. I find it genuinely useful in some scenarios. I'm just very skeptical of these AGI predictions.

Last edited by maskedslug on Fri Jun 07, 2024 8:54 am, edited 1 time in total.

Re: Ex-openai employee predicting agi in 3 years. Read his book and give thoughts?

bos wrote: ↑Fri Jun 07, 2024 7:44 am
From what I understand, we don't create quality data quickly enough. The millions of boomers commenting on each other's Facebook posts will not push your model. I work with AI and we can throw away 80% of the data we receive. That number must be much higher for OpenAI.

Here is a good critical piece written about the AI bubble.

https://www.wheresyoured.at/bubble-trou ... f%20GPT-4.

It's impossible for me to take seriously the opinions of a non-technical journalist who is an anti-tech doomer.

One of his articles is a takedown of Sora where he doesn't even mention the massive latency improvements. No, it's just a negative rant about how much he hates marketing and calling people corrupt? Is this a joke? It's like he's in another dimension. The product is real, they give you APIs you can use; I'm not sure what the "grift" is lmao.

Re: Ex-openai employee predicting agi in 3 years. Read his book and give thoughts?

maskedslug wrote: ↑Fri Jun 07, 2024 8:50 am
Looks like you're using GPT-4o, which might yield different results. My results came from the "GPT-4 (Limited Beta)" model. There is also a seed of randomness to these outputs. But no: not trolling. That is what I got back. And just tried again, and got the same:

I use GPT a handful of times every week. I find it genuinely useful in some scenarios. I'm just very skeptical of these AGI predictions.

I'm not sure what limited beta is, but it's not the output I get with the ChatGPT interface on 4 or 4o.

The only way I am able to reproduce what you are seeing is by using 3.5-turbo, which is significantly behind GPT-4 in terms of capabilities.

I think it's possible whatever app you are using is not using the actual 4 model or something, or maybe it's using some deprecated API for the older versions of 4.