For those who can't be f*ed to click, the idea is:

1. Build a macro model

2. See what the market is pricing in

3. On the basis of the macro model + an analysis of current and historical cross-asset correlation + your own subjective assessment, decide what is mispriced.
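As a rough illustration of steps 2 and 3, one common way to read what the market is pricing in for inflation is the breakeven rate: the nominal Treasury yield minus the inflation-indexed (TIPS) yield of the same maturity. The sketch below compares that breakeven with a forecast from your own macro model and flags any gap wider than a chosen threshold. The class and function names, the 0.25 percentage-point threshold and the example yields are all hypothetical. The breakeven also ignores inflation risk and liquidity premia, so it is only an approximation of market expectations.

```python
from dataclasses import dataclass


def breakeven_inflation(nominal_yield: float, real_yield: float) -> float:
    """Market-implied average inflation (percent): nominal yield minus TIPS (real) yield.

    Approximation only: ignores inflation risk and liquidity premia.
    """
    return nominal_yield - real_yield


@dataclass
class MispricingSignal:
    """Hypothetical container comparing a model forecast with a market-implied value."""
    model_view: float      # your macro model's forecast, in percent
    market_implied: float  # what market prices imply, in percent
    threshold: float       # minimum gap (percentage points) you consider meaningful

    @property
    def gap(self) -> float:
        # Positive gap: the model expects more than the market is pricing in.
        return self.model_view - self.market_implied

    @property
    def verdict(self) -> str:
        if self.gap > self.threshold:
            return "market underpricing"
        if self.gap < -self.threshold:
            return "market overpricing"
        return "roughly fair"


if __name__ == "__main__":
    # Made-up yields, for illustration only.
    implied = breakeven_inflation(nominal_yield=4.20, real_yield=1.90)
    signal = MispricingSignal(model_view=2.80, market_implied=implied, threshold=0.25)
    print(f"implied={implied:.2f}%, gap={signal.gap:+.2f}pp -> {signal.verdict}")
```

The "subjective assessment" part of step 3 is deliberately left out: the code only tells you where your model and the market disagree, not whether your model is right.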

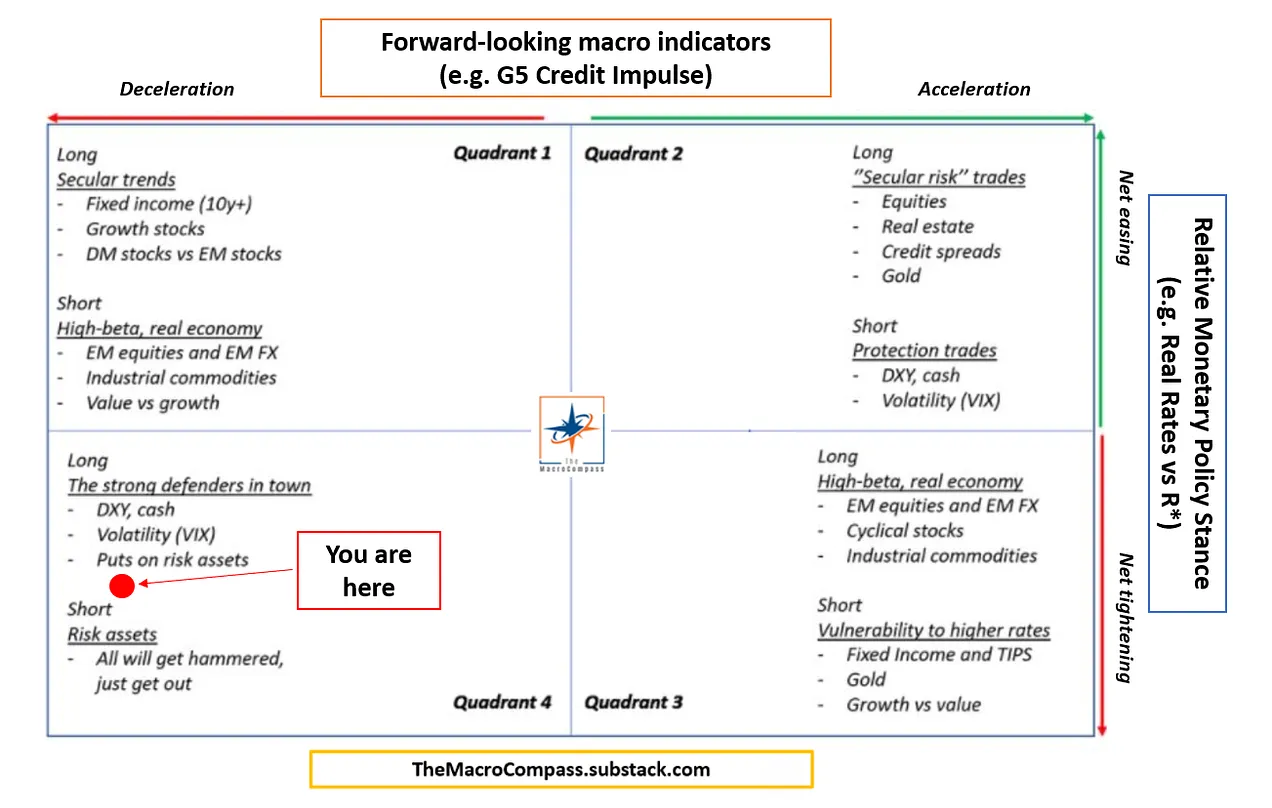

The macro model in the example is this:

The cross-correlation table in the example is this:

Replicating the above clearly requires both macroeconomic and technical knowledge. I assume one would write code that pulls publicly available data from various sources, and then have an application that analyzes that data and produces the required spreadsheet or chart.
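The data-pulling part can be fairly small. As one example, FRED (the St. Louis Fed's database) serves series as CSV from a `fredgraph.csv?id=SERIES` URL, and Python's standard library is enough to download, parse and align two series on common dates. `DGS10` (10-year Treasury yield) and `DFII10` (10-year TIPS yield) are real FRED series IDs, but the exact URL format and CSV layout are assumptions here, and the function names are hypothetical; FRED also has an official API (requires a free key) that is the more robust route.

```python
import csv
import io
import urllib.request
from datetime import date

# Assumed endpoint format; FRED's official API is an alternative that needs a key.
FRED_CSV_URL = "https://fred.stlouisfed.org/graph/fredgraph.csv?id={series_id}"


def parse_fred_csv(text: str) -> dict[date, float]:
    """Parse a two-column CSV (date, value) into {date: value}, skipping missing points."""
    reader = csv.reader(io.StringIO(text))
    next(reader, None)  # skip the header row, whatever its column names are
    series: dict[date, float] = {}
    for row in reader:
        if len(row) < 2:
            continue  # ignore blank or malformed lines
        raw_date, raw_value = row[0].strip(), row[1].strip()
        if raw_value in ("", "."):  # FRED marks missing observations with "."
            continue
        series[date.fromisoformat(raw_date)] = float(raw_value)
    return series


def fetch_fred_series(series_id: str) -> dict[date, float]:
    """Download and parse one series (requires network access)."""
    url = FRED_CSV_URL.format(series_id=series_id)
    with urllib.request.urlopen(url, timeout=30) as resp:
        return parse_fred_csv(resp.read().decode("utf-8"))


def align(a: dict[date, float], b: dict[date, float]) -> list[tuple[date, float, float]]:
    """Keep only dates present in both series, in chronological order."""
    return [(d, a[d], b[d]) for d in sorted(a.keys() & b.keys())]
```

Usage would be something like `rows = align(fetch_fred_series("DGS10"), fetch_fred_series("DFII10"))`, after which `rows[-1]` holds the latest date with both yields. Writing `rows` out with `csv.writer` gives you the "spreadsheet" end of the pipeline.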

While I don't walk around with financial formulas in my head, the macro/finance knowledge isn't the limiting factor for me. I am fairly confident that I have an alright-ish baseline understanding of finance and macro to build on, should I decide to go look up the formula for "volatility adjusted cross-asset correlation." I assume any averagely intelligent monkey with an econ degree can manage this, and I am an averagely intelligent monkey with an econ degree.
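There is no single standard formula for "volatility adjusted cross-asset correlation", so what follows is only one plausible reading, labelled as an assumption: divide each asset's daily log returns by its own trailing volatility (so calm and turbulent periods carry comparable weight), then take ordinary Pearson correlations of those standardized returns for every pair of assets. Dividing by a constant volatility would change nothing, because Pearson correlation is scale-invariant; it is the time-varying volatility that makes the adjustment matter. The 20-day window and all function names are hypothetical choices.

```python
import math
import statistics


def log_returns(prices: list[float]) -> list[float]:
    """Daily log returns from a price (or index level) series."""
    return [math.log(b / a) for a, b in zip(prices, prices[1:])]


def vol_standardize(returns: list[float], window: int = 20) -> list[float]:
    """Divide each return by the standard deviation of the previous `window` returns.

    Only past data is used (no look-ahead), so the first `window` returns are dropped.
    """
    out = []
    for t in range(window, len(returns)):
        vol = statistics.stdev(returns[t - window:t])
        out.append(returns[t] / vol if vol > 0 else 0.0)
    return out


def pearson(x: list[float], y: list[float]) -> float:
    """Plain Pearson correlation; undefined (ZeroDivisionError) if either input is constant."""
    mx, my = statistics.fmean(x), statistics.fmean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def vol_adjusted_corr_matrix(
    prices: dict[str, list[float]], window: int = 20
) -> dict[tuple[str, str], float]:
    """Pairwise correlations of volatility-standardized returns.

    `prices` maps an asset name to a price series; all series must share the same dates.
    """
    z = {name: vol_standardize(log_returns(p), window) for name, p in prices.items()}
    names = list(z)
    return {(a, b): pearson(z[a], z[b]) for a in names for b in names}
```

Comparing the matrix computed over, say, the last year with the one computed over a longer history is one simple way to get at the "current versus historical correlation" comparison from step 3.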

I am, however, completely at sea when it comes to the IT aspect of this. What IT skills does one need to pull this off?

I assume that, as a solitary muggle, I would not be able to replicate the full, detailed work of a professional whose business is essentially to build these tools and then sell muggles access to them by subscription. I assume he has at least one actual quant/programmer working for him as well. So I know that whatever I build is unlikely to match these tools in their full sophistication. I am also aware that I'm unlikely to gain any significant edge by building them, because everyone and their mom can build them with a bit of IT/econ training. But I'm still curious to make inroads into developing a simplified version of some of the above.

What should I look into learning? Also, is some of this already developed and available on, say, GitHub?